This article explains default configurations at the Node.js, Apache, and OS levels that can become bottlenecks, and how to change them. Any configuration shown in the diagram below can affect client request processing time.

In the example below, the Node.js server runs behind a reverse proxy server (Apache), and the process manager PM2 is used to load balance the Node.js application.

NodeJS

Concurrent Requests

By default, how many concurrent requests can a Node.js process handle?

Since JavaScript execution in Node.js is single-threaded, only one request's JavaScript runs at any instant. However, by using asynchronous operations and the event loop, Node.js can have many requests in flight at a given point in time.
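As a minimal sketch of this interleaving, the snippet below starts three simulated requests without waiting for any of them to finish. The names handleRequest and runDemo are hypothetical, and the 50 ms timer only stands in for real I/O.

```javascript
// Simulate an asynchronous request handler: the timer stands in for I/O.
function handleRequest(id, events) {
  events.push(`start ${id}`);
  return new Promise((resolve) => {
    setTimeout(() => {
      events.push(`finish ${id}`);
      resolve();
    }, 50);
  });
}

// Start three "requests" back to back without waiting for each one to finish.
async function runDemo() {
  const events = [];
  await Promise.all([1, 2, 3].map((id) => handleRequest(id, events)));
  return events;
}

runDemo().then((events) => console.log(events.join(', ')));
```

All three "start" entries are recorded before any "finish" entry, because each handler returns to the event loop as soon as it has scheduled its timer.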

Every connection to or from the Node.js server needs a socket, which the kernel creates. In Unix-based systems, any I/O operation a process performs (socket, accept, read, write, etc.) needs a file descriptor. By default, each process has a limit on how many file descriptors it can open. Below are the limits for a Node.js server process.

➜ ~ ps -ef | grep node | grep -v grep | awk '{print $2}' | xargs -I % sh -c 'printf "\nNode PID %\n";cat /proc/%/limits'

Node PID 26782

Limit Soft Limit Hard Limit Units

Max cpu time unlimited unlimited seconds

Max file size unlimited unlimited bytes

Max data size unlimited unlimited bytes

Max stack size 8388608 unlimited bytes

Max core file size 0 unlimited bytes

Max resident set unlimited unlimited bytes

Max processes 93348 93348 processes

Max open files 16384 16384 files

Max locked memory 65536 65536 bytes

Max address space unlimited unlimited bytes

Max file locks unlimited unlimited locks

Max pending signals 93348 93348 signals

Max msgqueue size 819200 819200 bytes

Max nice priority 0 0

Max realtime priority 0 0

Max realtime timeout unlimited unlimited us
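The same limit can be read from code. The sketch below parses the "Max open files" row out of text in the format shown above; parseMaxOpenFiles is a hypothetical helper name, and the sample rows are copied from the listing.

```javascript
// Parse the "Max open files" row from the text of /proc/<pid>/limits.
// Returns { soft, hard } as numbers (Infinity for "unlimited"), or null if the row is absent.
function parseMaxOpenFiles(limitsText) {
  const match = /^Max open files\s+(\S+)\s+(\S+)/m.exec(limitsText);
  if (!match) return null;
  const toNumber = (value) => (value === 'unlimited' ? Infinity : Number(value));
  return { soft: toNumber(match[1]), hard: toNumber(match[2]) };
}

// Sample rows copied from the listing above.
const sample = [
  'Max processes 93348 93348 processes',
  'Max open files 16384 16384 files',
  'Max locked memory 65536 65536 bytes',
].join('\n');

console.log(parseMaxOpenFiles(sample)); // { soft: 16384, hard: 16384 }
```

On Linux, passing the contents of /proc/self/limits (read with fs.readFileSync) would report the limits of the running Node.js process itself.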

Per the limits above, the Node.js process can open up to 16384 file descriptors. The command below shows how many file descriptors each Node.js process running under PM2 currently has open.

➜ ~ ps -ef | grep node | grep -v grep | awk '{print $2}' | xargs -I % sh -c 'printf "Node PID % - "; sudo ls -1 /proc/%/fd | wc -l'

Node PID 26782 - 42

Node PID 26787 - 46

Node PID 26794 - 41

Node PID 26799 - 42

Node PID 26806 - 42

Node PID 26811 - 38

Node PID 26818 - 38

Node PID 26828 - 44
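A process can also count its own descriptors, which may be handy for logging or alerting. This is a rough sketch that assumes a Linux /proc filesystem; countOpenFds is a hypothetical helper, and the count can include the descriptor used to read the directory itself.

```javascript
const fs = require('fs');

// Count the file descriptors open in a process by listing /proc/<pid>/fd.
// Linux only: returns null where /proc is unavailable or unreadable.
function countOpenFds(pid = 'self') {
  try {
    return fs.readdirSync(`/proc/${pid}/fd`).length;
  } catch (err) {
    return null;
  }
}

const openFds = countOpenFds();
console.log(openFds === null ? 'fd count unavailable on this platform' : `open fds: ${openFds}`);
```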

If a Node.js process reaches this limit (16384) and asks the kernel for a new file descriptor, the kernel returns EMFILE (the per-process limit on the number of open file descriptors has been reached), and Node.js throws:

Error: EMFILE, too many open files
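One way to make this failure visible is to check the error code where errors are handled. The sketch below is only an illustration: isFdExhaustion and onServerError are hypothetical names, and it assumes the error reaches a server's 'error' event with a code property set.

```javascript
// Returns true when an error means file descriptors are exhausted:
// EMFILE is the per-process limit, ENFILE the system-wide limit.
function isFdExhaustion(err) {
  return Boolean(err) && (err.code === 'EMFILE' || err.code === 'ENFILE');
}

// Hypothetical handler: attach with server.on('error', onServerError).
function onServerError(err) {
  if (isFdExhaustion(err)) {
    console.error('Out of file descriptors; check "Max open files" for this process');
    return;
  }
  throw err;
}

// Simulated error object, shaped like the errors Node.js attaches a code to.
const simulated = Object.assign(new Error('EMFILE, too many open files'), { code: 'EMFILE' });
console.log(isFdExhaustion(simulated)); // true
```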

To fix this, increase the limit by adding the line below at the end of the file /etc/security/limits.conf

* - nofile 20000

Note: To set the limit for a specific user instead, add the lines below. The process (PM2/node) must then be started as that user.

username soft nofile 20000

username hard nofile 20000

There is also a system-wide limit on the number of file descriptors that can be created

➜ ~ more /proc/sys/fs/file-max

2367356

To see how many file descriptors are currently allocated system-wide

➜ ~ more /proc/sys/fs/file-nr | awk '{print $1}'

1888
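The two system-wide numbers can be combined into a usage ratio. The sketch below parses text in the format of /proc/sys/fs/file-nr, which holds three fields: allocated handles, allocated but unused handles, and the maximum. parseFileNr is a hypothetical helper, and the middle value of 0 in the sample is an assumption, since the article only prints the first field.

```javascript
// Parse the three whitespace-separated fields of /proc/sys/fs/file-nr.
function parseFileNr(text) {
  const [allocated, unused, max] = text.trim().split(/\s+/).map(Number);
  return { allocated, unused, max, usedRatio: allocated / max };
}

// Sample built from the values shown above; the middle field (0) is assumed.
const fileNrSample = '1888\t0\t2367356\n';
const usage = parseFileNr(fileNrSample);

console.log(`${usage.allocated} of ${usage.max} descriptors allocated`);
```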

To test various configurations on Node.js and Apache, the blocking and non-blocking request handlers below are used.

const express = require('express');

const app = express();

let count = 0;

app.get('/', (req, res) => res.send('Hello, World'));

app.get('/block', (req, res) => {

count = count + 1;

console.log('got request block', count);

const end = Date.now() + 500000;

while (Date.now() < end) {

const doSomethingHeavyInJavaScript = 1 + 2 + 3;

}

res.send('I am done!');

});

app.get('/non-block', (req, res) => {

// Imagine that setTimeout is an I/O operation

// setTimeout is implemented natively, not in JavaScript

count = count + 1;

console.log('got request non-block', count);

setTimeout(() => res.send('I am done!'), 500000);

});

const server = app.listen(8443, () => console.log('app listening on port 8443'));

server.setTimeout(5000000);

For reference, below is the Apache proxy configuration (httpd.conf) used to route requests to Node.js

ServerRoot "/etc/httpd"

Listen 80

Include conf.modules.d/*.conf

User apache

Group apache

ServerAdmin root@localhost

<Directory />

AllowOverride none

Require all denied

</Directory>

DocumentRoot "/var/www/html"

<Directory "/var/www">

AllowOverride None

Require all granted

</Directory>

<Directory "/var/www/html">

Options Indexes FollowSymLinks

AllowOverride None

Require all granted

</Directory>

<IfModule dir_module>

DirectoryIndex index.html

</IfModule>

<Files ".ht*">

Require all denied

</Files>

ErrorLog "logs/error_log"

LogLevel warn

<IfModule log_config_module>

LogFormat "%h %l %u %t \"%r\" %>s %b \"%{Referer}i\" \"%{User-Agent}i\"" combined

LogFormat "%h %l %u %t \"%r\" %>s %b" common

<IfModule logio_module>

LogFormat "%h %l %u %t \"%r\" %>s %b \"%{Referer}i\" \"%{User-Agent}i\" %I %O" combinedio

</IfModule>

CustomLog "logs/access_log" combined

</IfModule>

<IfModule alias_module>

ScriptAlias /cgi-bin/ "/var/www/cgi-bin/"

</IfModule>

<Directory "/var/www/cgi-bin">

AllowOverride None

Options None

Require all granted

</Directory>

<IfModule mime_module>

TypesConfig /etc/mime.types

AddType application/x-compress .Z

AddType application/x-gzip .gz .tgz

AddType text/html .shtml

AddOutputFilter INCLUDES .shtml

</IfModule>

AddDefaultCharset UTF-8

<IfModule mime_magic_module>

MIMEMagicFile conf/magic

</IfModule>

EnableSendfile on

IncludeOptional conf.d/*.conf

<VirtualHost *:80>

ProxyRequests Off

ProxyPass / http://localhost:8443/ retry=0 acquire=1000 timeout=600 Keepalive=On

ProxyPassReverse / http://localhost:8443

</VirtualHost>

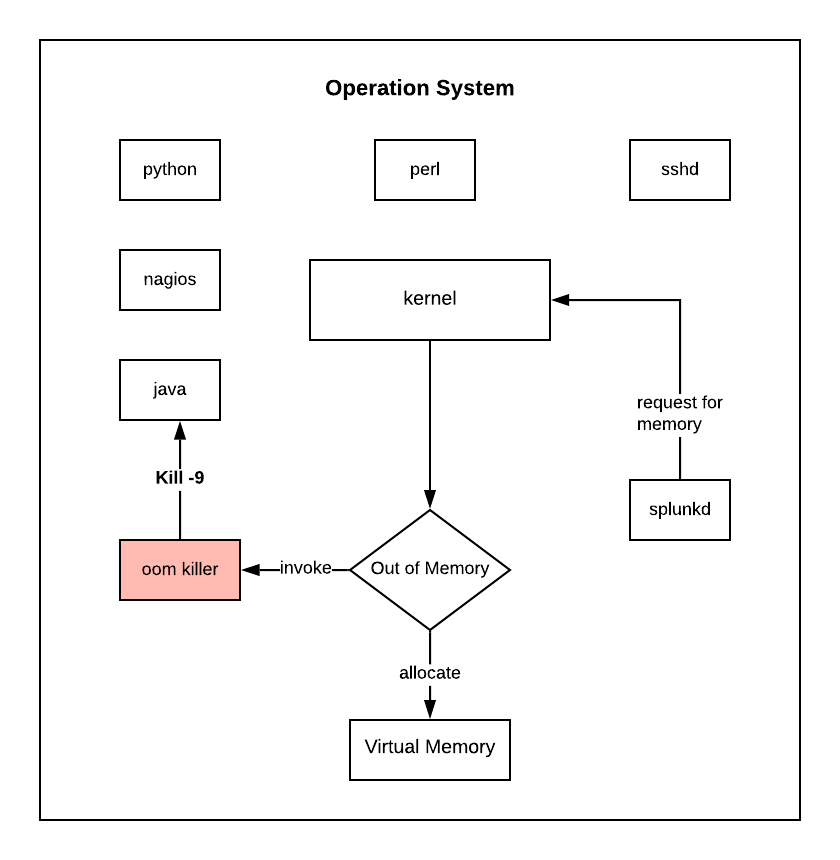

Regarding OS, the below experiment was done on CentOS.

Node.js Backlog

What is the default backlog of the node process?

If a blocking request is executing in Node.js, how many requests can be kept in the backlog?

In Unix-based systems, the maximum number of connections that can wait in a socket's backlog is capped by the setting below.

➜ ~ more /proc/sys/net/core/somaxconn

128

For example, 131 blocking requests are sent to Node.js using the command below.

➜ ~ seq 1 131 | xargs -P 131 -I % sh -c 'time curl localhost:8443/block; echo %'

Using the netstat command, we can see that 131 connections arrived at port 8443.

# netstat -antp | grep "::1:8443" | grep -v curl | wc -l

131

Of those, one was established with node.

# netstat -antp | grep "::1:8443" | grep "ESTABLISHED.*node" | wc -l

1

129 connections are waiting on port 8443 to be accepted by node, and 1 request is in the SYN state.

# netstat -antp | grep "::1:8443" | grep "ESTABLISHED -" | wc -l

129

# netstat -antp | grep "::1:8443" | grep 'SYN' | grep -v curl | wc -l

1

SYN retries are governed by the settings below

➜ ~ more /proc/sys/net/ipv4/tcp_synack_retries

5

➜ ~ more /proc/sys/net/ipv4/tcp_syn_retries

6

A connection stuck in the SYN state can time out in ~100 seconds. After the retries are exhausted, the server sends a connection reset.

The 131st request received a TCP RST, which led to Connection reset by peer after 102 seconds

curl: (56) Recv failure: Connection reset by peer

real 1m42.688s

user 0m0.003s

sys 0m0.003s

131

To fix the above issue, we can increase the backlog limit on a socket

sysctl -w net.core.somaxconn=1024

To make the above setting permanent, add the line below to /etc/sysctl.conf

net.core.somaxconn=1024

Run sysctl -p or restart for the setting to take effect.

After applying the above setting, 1000 blocking requests are sent to the node server

➜ ~ seq 1 1000 | xargs -P 1000 -I % sh -c 'time curl localhost:8443/block; echo %'

Only 512 requests are in the backlog

# netstat -antp | grep "::1:8443" | grep "ESTABLISHED -" | wc -l

512

After 105 seconds, the 513th request got Connection reset by peer

➜ ~ seq 1 1000 | xargs -P 1000 -I % sh -c 'time curl localhost:8443/block; echo %'

curl: (56) Recv failure: Connection reset by peer

curl: (56) Recv failure: Connection reset by peer

real 1m45.306s

user 0m0.005s

sys 0m0.011s

513

Only 512 are in the backlog because the default backlog setting on the node server is 511.
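The counts observed so far (129 with somaxconn at 128, and 512 with somaxconn at 1024) fit a simple rule of thumb, sketched below. acceptQueueCapacity is a hypothetical helper, and the "+ 1" is an assumption inferred from these measurements rather than a documented guarantee.

```javascript
// Estimate how many completed connections can wait for accept() on a listening socket.
// Assumptions: Linux caps the requested backlog at net.core.somaxconn, and the queue
// holds one more connection than the capped value (as the counts above suggest).
function acceptQueueCapacity(requestedBacklog, somaxconn) {
  return Math.min(requestedBacklog, somaxconn) + 1;
}

console.log(acceptQueueCapacity(511, 128));  // 129
console.log(acceptQueueCapacity(511, 1024)); // 512
```

Under this estimate, raising only somaxconn stops helping once it exceeds the backlog the application asked for, which is why the Node.js backlog also has to be raised.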

The default backlog can be increased by passing the backlog parameter to the listen method, as shown below.

const server = app.listen(8443, "localhost", 1024, () => console.log('app listening on port 8443'));

After the above setting, when 1000 blocking requests are made, 999 requests are kept in the backlog.

# netstat -antp | grep "127.0.0.1:8443" | grep "ESTABLISHED -" | wc -l

999

Connection timeout

If 131 non-blocking requests are sent to node

➜ ~ seq 1 131 | xargs -P 131 -I % sh -c 'time curl localhost:8443/non-block; echo %'

131 requests came to 8443 port

# netstat -antp | grep "::1:8443" | grep -v curl | wc -l

131

Out of those, all 131 got established with node.

# netstat -antp | grep "::1:8443" | grep "ESTABLISHED.*node" | wc -l

131

0 requests are waiting in the SYN backlog, and 0 connections are waiting on port 8443 to be accepted

# netstat -antp | grep "::1:8443" | grep 'SYN' | grep -v curl | wc -l

0

# netstat -antp | grep "::1:8443" | grep "ESTABLISHED -" | wc -l

0

After 2 minutes, the 1st request got disconnected

curl: (52) Empty reply from server

real 2m0.093s

user 0m0.006s

sys 0m0.017s

1

This is because Node.js has a default timeout of 2 minutes, after which the connection is terminated. (Node.js 13 and later changed this default to 0, meaning no timeout.)

The timeout can be increased by using the setTimeout method.

server.setTimeout(5000000);

Note: The timeout only applies while the request is waiting in the event loop. If a (blocking) request is currently executing in Node.js, this setting has no effect on it.
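For illustration, here is a minimal sketch of the timeout setting using only the built-in http module in place of the Express app above. The 1-second delayed handler is a stand-in, and the server is never started, so the snippet only shows how the value is set and read back.

```javascript
const http = require('http');

// Stand-in for the article's Express app: replies after a delay.
const server = http.createServer((req, res) => {
  setTimeout(() => res.end('I am done!'), 1000);
});

// Raise the socket inactivity timeout (milliseconds); setTimeout() updates server.timeout.
server.setTimeout(5000000);

// Optional: log sockets that time out. Once a 'timeout' listener is attached,
// the listener is responsible for closing the socket.
server.on('timeout', (socket) => {
  console.log('socket timed out');
  socket.destroy();
});

console.log(`server.timeout = ${server.timeout} ms`);
```

The http.Server returned by Express's app.listen exposes the same setTimeout method, which is what the article's listing calls.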

Apache

Proxying to node

Requests are proxied from Apache to node using mod_proxy

ProxyRequests Off

ProxyPass / http://localhost:8443/ retry=0 acquire=1000 timeout=600 Keepalive=On

ProxyPassReverse / http://localhost:8443

Notice the timeout setting: when Apache forwards a request to node, it waits up to 600 seconds (10 minutes) before terminating it.

Having fine-tuned Node.js, let's look at Apache configurations.

502 Proxy error

By default, net.core.somaxconn is set to 128. 131 blocking requests are sent through httpd to Node.js using the command below.

➜ ~ seq 1 131 | xargs -P 131 -I % sh -c 'time curl localhost/block; echo %'

131 requests established with httpd on port 80

# netstat -antp | grep "::1:80" | grep "ESTABLISHED.*httpd" | wc -l

131

131 requests forwarded to Node.js 8443

# netstat -antp | grep "::1:8443" | grep "ESTABLISHED.*httpd" | wc -l

131

1 is in the SYN backlog, 129 connections are waiting on port 8443 to be accepted, and 1 request is with the node process.

The 131st request got a TCP RST from Node.js, and Apache sent a 502 Proxy Error to the client.

<!DOCTYPE HTML PUBLIC "-//IETF//DTD HTML 2.0//EN">

<html><head>

<title>502 Proxy Error</title>

</head><body>

<h1>Proxy Error</h1>

<p>The proxy server received an invalid

response from an upstream server.<br />

The proxy server could not handle the request <em><a href="/block">GET /block</a></em>.<p>

Reason: <strong>Error reading from remote server</strong></p></p>

</body></html>

real 1m50.354s

user 0m0.006s

sys 0m0.001s

131

Note: Apache will also send a 502 Proxy Error when Node.js receives SIGTERM (kill -15) or SIGKILL (kill -9), or when it is restarted.

Server limit

When 512 non-blocking requests are sent to Apache, only 256 requests are sent to Node.js.

Note: A connection stuck in the SYN state can time out in ~100 seconds. After a certain number of retries, the Apache server sends a connection reset.

➜ ~ seq 1 512 | xargs -P 512 -I % sh -c 'time curl localhost/non-block; echo %'

curl: (56) Recv failure: Connection reset by peer

curl: (56) Recv failure: Connection reset by peer

real 1m49.746s

user 0m0.007s

sys 0m0.002s

385

Why are only 256 requests sent to Node.js? With the prefork MPM, ServerLimit is 256 by default, so Apache can create only 256 processes (one process per request).

This can be increased by adding the configuration below to Apache's httpd.conf

<IfModule mpm_prefork_module>

StartServers 100

MinSpareServers 50

MaxSpareServers 80

ServerLimit 1300

MaxClients 1300

MaxRequestsPerChild 0

</IfModule>

Apache Backlog

After increasing the socket backlog by raising net.core.somaxconn to 1024, when 1000 requests are sent to Apache, Apache forwards only 512 requests.

Apache has a default backlog of 511 requests. This can be increased by adding the setting below to httpd.conf

ListenBacklog 1023
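Putting the Apache settings together, the sketch below gives a back-of-the-envelope estimate of how many client connections Apache can hold at once. It is a rough model, not Apache's actual accounting: apacheConnectionCapacity is a hypothetical helper, it assumes the prefork MPM with one connection per process, and it reuses the assumption that the listen queue holds min(ListenBacklog, somaxconn) + 1 connections.

```javascript
// Rough model of how many client connections Apache (prefork MPM) can hold at once.
// Assumptions (not Apache's exact accounting): each worker process serves one
// connection, and the listen queue holds min(ListenBacklog, somaxconn) + 1 more.
function apacheConnectionCapacity({ serverLimit, listenBacklog, somaxconn }) {
  const queued = Math.min(listenBacklog, somaxconn) + 1;
  return { workers: serverLimit, queued, total: serverLimit + queued };
}

// Defaults discussed in the article.
const defaults = apacheConnectionCapacity({ serverLimit: 256, listenBacklog: 511, somaxconn: 128 });
console.log(defaults); // { workers: 256, queued: 129, total: 385 }

// Values after the tuning described in the article.
const tuned = apacheConnectionCapacity({ serverLimit: 1300, listenBacklog: 1023, somaxconn: 1024 });
console.log(tuned.total); // 2324
```

The default estimate of 385 is in line with the 512-request run, where the reset shown was printed for request 385, though that match should be treated as suggestive rather than proof of the model.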

I hope this helps find any bottlenecks at Node.js, Apache, and OS. – RC
